Welcome

I'm Scott and I'm a software engineer based in Suffolk in the UK (for now). I should start off by explaining why I'm blogging. The main goal is to form a timeline of topics that I was either learning or interested in. If I were able to post every 2 weeks, for example, I could look back through ~42 of my posts and see what I was up to. That's really valuable to me.

I could do this all privately, of course, but the pressure of someone else potentially reading a post makes me think more about what I'm writing. I've often struggled to communicate my thoughts clearly; writing a blog post really forces me to organise things in my head a lot more, which I hope will improve my communication skills in general.

The other benefit of it being public is that I get to share my knowledge with others, which I don't get to do enough day to day unfortunately. Hopefully, you find something useful here.

Not all of my posts will provide the complete picture; I really don't want to end up refining drafts of blog posts for eternity until I have the courage to hit the 'publish' button. I want to share my opinions or thoughts even though they might need further refinement, so bear with me on that.

Posts

The further I’ve gone in my career in software development, the more I’ve recognised that a large part of our job is entirely about managing risk. There are two distinct strategies for dealing with these risks. One camp prefers to be cautious and avoid or restrict change as much as possible, while the other camp expects change and uses practices and tools to allow those changes to occur as easily as possible.

Both strategies acknowledge the risks but deal with them differently. The first camp fears the risks and minimises them by minimising change. I was in a software safety certification meeting last week with an accreditation body, and the recommendations they provide come from a document written in 1990. Every minor change incurred a huge waterfall-like process with reports and handovers between each stage; coding did not start until several layers of approval had been granted.

I haven’t blogged in a very long time, and the half-finished state of my last post is annoying as I’ve lost the thread of where it was going, but I might go back to it. World of Warships has destroyed that lovely routine of blog posting.

Anyway, here’s a small agile rant:

Imagine spending an hour going through a load of features a client wants, and then your team is asked how much of it they promise to do in the next 2 weeks.

Some of us like to split software up into various little pieces, while others like to create larger files and keep everything together. These are two different styles of programming that don’t fit well together. Programmers who like big chunks find my code hard to understand, while I find code in larger chunks more difficult to understand.

But everyone does break up their software (of significant size, anyway) into chunks; I’m disregarding those who write an entire piece of software in a single file as that’s more of an edge case. This is the first difference: the size of the chunks. But there’s another difference too.

The practice of TDD is a handy design tool, especially around the levels of classes and methods within those classes. It’s only useful in this way if you’re actively listening to your tests, however.

The most obvious piece of feedback is whether or not your code works, but there’s far more feedback you get when writing a test than this.

Here’s a quick list of things that come to mind when I’m writing tests:

I keep coming across old books around software engineering that are hard to find online, and hard to find as ebooks too. I’ve tried to find a copy of ‘Structured Design - Fundamentals of a Discipline of Computer Program and Systems Design’ recently. It’s possible to get a used copy for £30 or so if you’re willing to wait, while Amazon sells this book for a whopping £70. It was published in 1979, which blows my mind. I haven’t read it yet as it was only delivered today, but I’ve had a flick through it. While the tech and the tools we use to get the job done change massively, the core principles behind software architecture really don’t seem to change a great deal at all.

If you follow dev Twitter, you will have seen yet more outrage over ‘Uncle Bob’ Martin, who was ‘de-platformed’ from a conference recently. I’ve posted about something similar before, when the John Sonmez saga played out. Back then I focused on the argument regarding consequences for speech and how they’re equivalent to threats for anyone thinking of voicing similar ideas. People also like to call it accountability, but as Thomas Chatterton Williams writes: “being held to account implies an opportunity to explain oneself” - when have you seen a mob relent and give anyone that opportunity?

I wrote a post about dependency injection overuse back in April 2019 and I ended it with:

Dependencies don’t always need to or should be injected, sometimes using the new keyword is okay. Knowing what to inject and what not to inject is something I can’t quite define concretely right now. What I am certain of is that not every class you create needs the suffix ‘Service/Helper’ or needs to be registered in your container as a singleton each and every time.

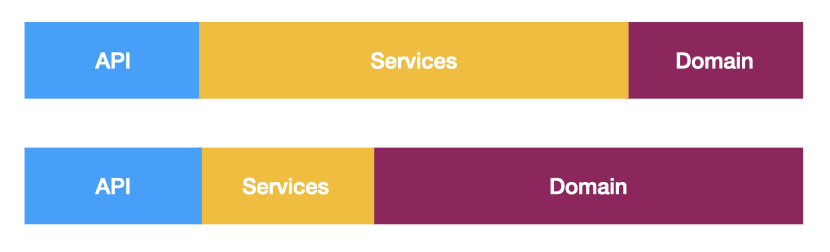

Most of my posts around clean code until now have been focussed on the object level of design. One thing that is often abandoned is the use of modules; I’m guilty of it too. I remember that at my first job creating modules was a real pain and was always something I wanted to avoid, as it’d cause weird generic errors in our system that you’d spend hours trying to fix. That was a bit of an edge case; creating a module most of the time is just a case of clicking a few buttons in your IDE.

When learning to write unit tests nowadays it’s highly likely that you’ll be taught about using test doubles (or ‘mocking’ if using slang). However, I think it’s rare you’ll be taught or even hear about the notion of sociable unit testing, a term coined by Jay Fields in his book Working Effectively with Unit Tests.

I first heard about this concept from Martin Fowler’s website, which is filled with nice snippets of wisdom. I’m not going to re-explain the difference between solitary and sociable tests, but it boils down to whether you should isolate your class completely using test doubles (solitary) or use the concrete dependencies themselves (sociable).

Have you ever been at a company where perhaps Scrum was ’the process’ then suddenly a new process idea comes along that we need to follow? Teams trying to move to the Spotify model is a good example of this.

I don’t like massive process changes at all. Massive changes seem to be driven by frustration with the current situation and an eagerness to just move to something new. By making sweeping changes like this the problems that are hurting your teams right now don’t get analysed and solved. They get swept away. With a massive change comes a whole new set of problems that your teams will have to deal with.

If you come from writing unit tests after the fact, you might struggle when writing your tests first. A test written after the fact and one written via TDD don’t have to differ at all. How you write the test, however, will differ significantly.

Everyone does this slightly differently by the way, but here’s how I (mostly) do it.

    [Fact]
    public void test() {
    }

- Sometimes I can’t think of a name straight away, sometimes I can - if I can’t then I name it something stupid. At this point I don’t care.

    [Fact]
    public void test() {
        var result = calculator.Add(5, 6);
    }

- Now I do my act part of the test. This is where I’m thinking heavily about the API I want to interact with. In this case it’s simple and I can throw the inputs straight in.

If the arguments to the method were complex objects then it’d be different. If they’re new types that I’m creating right now then I’ll create an empty class with the name I want and that’s it - then I’ll initialise one of those objects as a parameter with the default constructor.
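As a rough sketch of that step with a more complex argument, the test might look something like the following. The `Basket` and `PriceCalculator` names are hypothetical and only there for illustration, and xUnit is assumed because of the `[Fact]` attribute in the snippets above. The placeholder type is deliberately empty, and the method under test is stubbed just enough to compile, so the test is expected to fail at this point.

```csharp
using System;
using Xunit;

// Hypothetical placeholder type: created empty, with only the name decided so far.
public class Basket { }

// Hypothetical class under test, stubbed just enough for the test to compile.
public class PriceCalculator
{
    public decimal Total(Basket basket) => throw new NotImplementedException();
}

public class PriceCalculatorTests
{
    [Fact]
    public void test()
    {
        var calculator = new PriceCalculator();

        // Act: pass a default-constructed placeholder to see how the API reads.
        var result = calculator.Total(new Basket());
    }
}
```

Nothing is asserted yet; the point of this stage is only to try out the shape of the API before any real behaviour exists.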

I recently had a problem where I couldn’t use TDD. Since November, any C# I’ve written has been with TDD. So, what do you do when you can’t use TDD? I like TDD because I like that feedback loop around my design and around the outputs of my code. The aim is to get as close to that as possible, not to try and religiously follow the 3 laws of TDD at any cost.

This is the first in a series of blog posts about what I’ve learned from practising TDD from the beginning of a project right until the end. I’ve been doing bits of TDD here and there for over a year, but since mid-November most of the code I have written has been with TDD.

Moq is a tool that can be used in .NET to help you (the developer) create mocks for use in your unit tests. Moq speeds things up if you have to create true mocks as you don’t need to write any of the verification logic. For simple test dummies or stubs, it makes code marginally quicker to write, but it’s not a deal-breaker.
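To illustrate the difference, here is a minimal sketch that verifies the same interaction twice: once with a hand-written mock and once with Moq. The `IEmailSender`, `SignupService` and `RecordingEmailSender` types are made up for this example, and xUnit is assumed as the test framework. The Moq calls used are `Mock<T>`, `.Object`, `Verify`, `It.IsAny<T>()` and `Times.Once()`.

```csharp
using Moq;
using Xunit;

// Hypothetical dependency and class under test, for illustration only.
public interface IEmailSender
{
    void Send(string to, string body);
}

public class SignupService
{
    private readonly IEmailSender _sender;
    public SignupService(IEmailSender sender) => _sender = sender;
    public void Register(string email) => _sender.Send(email, "Welcome!");
}

// Hand-written mock: the recording logic has to be written and maintained manually.
public class RecordingEmailSender : IEmailSender
{
    public int SendCalls { get; private set; }
    public string LastRecipient { get; private set; } = "";

    public void Send(string to, string body)
    {
        SendCalls++;
        LastRecipient = to;
    }
}

public class SignupServiceTests
{
    [Fact]
    public void Register_sends_welcome_email_hand_written_mock()
    {
        var sender = new RecordingEmailSender();
        new SignupService(sender).Register("scott@example.com");

        Assert.Equal(1, sender.SendCalls);
        Assert.Equal("scott@example.com", sender.LastRecipient);
    }

    [Fact]
    public void Register_sends_welcome_email_moq()
    {
        var sender = new Mock<IEmailSender>();
        new SignupService(sender.Object).Register("scott@example.com");

        // Moq records the call itself, so no verification logic needs to be written.
        sender.Verify(s => s.Send("scott@example.com", It.IsAny<string>()), Times.Once());
    }
}
```

For a stub that only returns a canned value, the hand-written version is usually just a few lines, which is why the saving there is marginal.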

Kent Beck has an excellent idea in his book ‘Extreme Programming Explained’ about how an XP team should play to win rather than playing not to lose.

I think most of us have seen those football matches where the underdog takes the lead in the first half and shocks everyone, only to lose in the 2nd half. In the first half they went all out, but the pressure of maintaining that winning position then compromises their 2nd half.

I think dependency injection (DI) is great, but I was thinking about how often I’ve been using it recently and wondering whether I might be overusing it. DI has become far more popular with all the container frameworks around. A container isn’t necessary to use the DI pattern, though; without one there is just a bit more typing and wiring things together.

Before I started working on projects with a container of some sort I made infrequent use of the DI pattern. Once the container came along I was using it all the time to the point where I didn’t use the new keyword at all. Why? Did I really need all this extra flexibility provided by DI? Was I just using it because it looks clean? Depending on an interface can never be bad right? I was thinking to myself that if I didn’t have this container and had to do ‘pure DI’ - would I be injecting this? Probably not.
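For anyone unfamiliar with the term, ‘pure DI’ just means wiring the object graph by hand instead of asking a container to do it. The sketch below is one possible illustration, not a rule: all of the types are hypothetical, and the choice of what gets injected (the clock) and what is simply created with `new` (the VAT calculator, with a 20% rate picked arbitrarily) is an assumption made for the example.

```csharp
using System;

// Hypothetical volatile dependency: the current time is worth swapping out in tests.
public interface IClock
{
    DateTime Now { get; }
}

public class SystemClock : IClock
{
    public DateTime Now => DateTime.UtcNow;
}

// Hypothetical simple, deterministic collaborator: a candidate for a plain 'new'.
public class VatCalculator
{
    public decimal AddVat(decimal net) => net * 1.2m;
}

public class Invoice
{
    public Invoice(decimal gross, DateTime issuedAt)
    {
        Gross = gross;
        IssuedAt = issuedAt;
    }

    public decimal Gross { get; }
    public DateTime IssuedAt { get; }
}

public class InvoiceFactory
{
    private readonly IClock _clock;                            // injected
    private readonly VatCalculator _vat = new VatCalculator(); // created directly

    public InvoiceFactory(IClock clock) => _clock = clock;

    public Invoice Create(decimal net) => new Invoice(_vat.AddVat(net), _clock.Now);
}

public static class Program
{
    public static void Main()
    {
        // 'Pure DI': the dependencies are composed by hand, with no container involved.
        var factory = new InvoiceFactory(new SystemClock());
        Console.WriteLine(factory.Create(100m).Gross);
    }
}
```

Having to type out the wiring in `Main` is the extra cost of skipping the container, and it is also a prompt to ask whether each dependency really needs to be injected.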

I gave a presentation on what continuous integration (CI) is to a group of apprentices during my final week working at Derivco and I was shocked by how little I actually knew about continuous integration. I think continuous integration is one of these things that most teams assume they’re practicing because they happen to be using a tool like TeamCity or Jenkins that screams about its wonderful CI features.

CI is actually much more than merely creating an automatic build with some automated tests attached; it’s a culture of committing small changes to the mainline every day. Typically, ‘mainline’ with Git is the master branch, or perhaps some other branch like ‘develop’ depending on how a particular team organises its work.

During one of my interviews recently (I got the job!) I had to give a presentation on a topic I was interested in. I ended up doing a presentation on WebAssembly! I’ve been working with web applications for the past couple of years so WebAssembly was an advancement I was excited for. This post is about what I learned about WebAssembly and why I think it’s an exciting addition to the web.

I’ve yet again decided to start blogging, although I’ve decided to start fresh on this new domain. I must resist the urge to write a ‘how to solve a merge conflict’ post purely for SEO purposes.

The main goal of keeping this blog is that, by sharing my opinions here, I a) get better at communicating what I think and b) learn more about topics than I might have if I hadn’t written a blog post on them. For anyone who ends up reading my blog, I hope anything I write ends up being somewhat more informative than this pretty pointless post.